In the interest of not burying the lede, the following line in OpenAI’s recent blog post on Codex turned my head and is why I wrote this post. Read on for a history of Codex and how we got here.

During testing, we've seen GPT‑5-Codex work independently for more than 7 hours at a time on large, complex tasks, iterating on its implementation, fixing test failures, and ultimately delivering a successful implementation.

I keep close tabs on the SOTA of coding agents, really close. To be clear, I only test, and am only concerned with, capabilities on brownfield projects: not prototypes, and not the front-end-only types of tasks you see on X and YouTube.

In the last few months the advancements have been fierce. Here’s the play-by-play, rewinding to 2022.

(rewinding sound)

Code Tab Completion Era (6/2022)

OpenAI commercialized Codex through GitHub Copilot’s initial beta launch. This was the first widely used LLM-based code completion product, and it went GA in June of 2022. I was gladly paying $10 a month for this at the time.

https://github.blog/news-insights/product-news/github-copilot-is-generally-available-to-all-developers/

Code Problem Solving Era (3/2023)

Very quickly thereafter, OpenAI launched GPT-4, which took quite a while to get into GitHub Copilot because it wasn’t a ‘Codex’ model. It seems OpenAI stopped or back-burnered the Codex project during that time, so coding with AI moved to copy/paste from ChatGPT.

…

Then came Cursor, the Copilot killer. It is still the 800 lb gorilla and still a juggernaut. Then came Windsurf and other IDE forks.

You basically didn’t hear the term Codex anymore unless you were digging into their model list on the playground.

Early Agentic Coding Era (6/2024)

Enter Anthropic with the Claude 3.5 Sonnet release. Why was this important?

Because, as I see it, this was the first frontier foundation model released with a clear focus on agentic coding. The model card addendum indicated that they had internally tested a coding loop, which a lot of context-engineering practitioners now call the ‘inner loop’.

Agentic coding history really starts with this release.

Almost immediately after this model card came out, the early version of Cline was released. It took advantage of this inner loop and built an outer loop around it as a VSCode extension.

See my previous post about it here…

Why I Code with Cline

Remember when tab completion felt revolutionary? Those little suggestions that would pop up as you typed, helping you remember method names and API calls. Then came the era of AI code completion - GitHub Copilot and its contemporaries changed how we think about coding assistants.

Claude Code and Coding CLI Era (3/2025)

These two go hand-in-hand. Even though there had been CLI-based AI coding assistants already - Aider is the OG - they just hadn’t captured the moment, or weren’t able to change their harness fast enough to adapt.

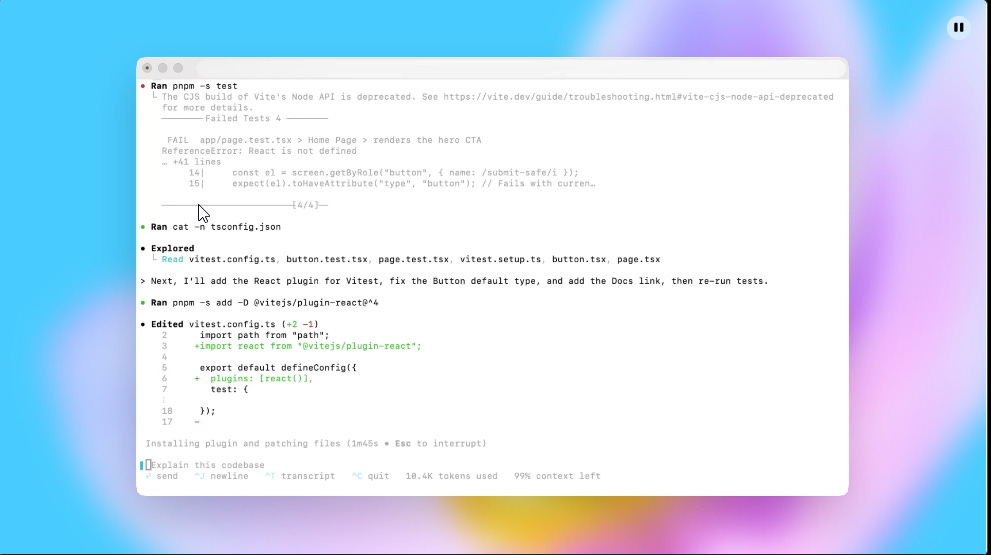

From its launch in March of this year until recently, I worked exclusively with Claude Code. They have made amazing advancements with this tool, releasing new versions multiple times a day.

Most recently they added subagents, which make much longer time-horizon tasks possible. My focus has been on managing the context window and making sure the teams I’m working with have guardrails in place to prevent the currently inevitable … splintering of focus that leads to dead ends.

I have a curated set of subagents and commands specifically for this purpose in a new project I’ll be posting about called ‘mem8’. More to come on that soon. Others have published many useful subagents that break down the larger tasks.

Brand New GPT-5 Codex Era

OpenAI released a CLI called Codex in April of this year, and it was a horrible answer to Claude Code. It didn’t work well, but it was open source, unlike Claude Code, so I thought I’d keep my eye on it as new versions came out. It used Ink to render, just like Claude Code.

At this point my experience with Claude Code was great, but the Ink rendering engine struggled and I believe it just needs to be replaced. I’ve seen the Charm-based Crush in action but haven’t used it myself. Regardless, I knew there were going to be better experiences in the new ‘CLI Era’.

On May 30th, OpenAI announced on the codex discussion board that they were retiring their TypeScript implementation in favor of a new Rust-based CLI.

Since their Rust release, they seem to have upped their game on this front, trying to get ahead of Claude Code. The better rendering engine and crisp responsiveness of Rust are palpable. If you’ve used Rust tools before, it’s one of those IYKYK situations.

Because of this port from TypeScript to Rust, I decided to give ‘Codex’ another shot.

Then 2 days ago, on September 17th, 2025, they dropped the new GPT-5 Codex model for use inside the ‘codex’ CLI, which is different from using the GPT-5 model inside of ‘codex’. I know, very confusing, which is why I wrote this article: to help disambiguate the history a bit, and also to drive the point home.

The point?

If you want to be at the cutting edge of AI coding, you need to look at codex with the GPT-5 Codex model detailed in this release:

https://openai.com/index/introducing-upgrades-to-codex/

I'm listening. 👨‍💻

... and FWIW, I think Claude with Sonnet 4.5 took the throne back.